Jonas Helming, Maximilian Koegel and Philip Langer co-lead EclipseSource, specializing in consulting and engineering innovative, customized tools and IDEs, with a strong …

Introducing Anthropic's Model Context Protocol (MCP) for AI-Powered Tools in Theia AI and the Theia IDE

December 19, 2024 | 6 min Read

We are excited to announce that Theia AI and the AI-powered Theia IDE now integrate Anthropic’s Model Context Protocol (MCP), enhancing the capabilities of Theia-based, AI-powered tools and IDEs with a huge ecosystem of available context sources and functions. Do you want to enable the AI assistants in your tool or IDE to search the web, access GitHub, read and write to databases, or communicate via Slack? MCP in Theia AI gives you all of this and more for free!

In case you don’t know Theia AI or the AI-powered Theia IDE, visit the Theia AI introduction and the AI Theia IDE overview, or download the AI-powered Theia IDE here.

What is MCP?

The Model Context Protocol is an open standard that enables developers to build secure, two-way connections between external systems and AI-powered tools, replacing fragmented integrations with a single protocol.

The idea is similar to the popular Language Server Protocol (LSP): You can implement so-called MCP servers that provide a specific feature set, e.g. access to a local file system or web search. AI-powered tools that want to integrate these servers only have to “speak” MCP and can therefore augment their feature set very easily by installing new MCP servers.

A standout feature of MCP is its support for functions, which allows AI systems to perform specific tasks by interfacing directly with external tools and data sources. This functionality is particularly beneficial for AI-powered tools and IDEs, as it enables seamless integration with various services. As MCP also makes a detailed description of the available functions accessible to the AI, you don’t have to implement any MCP-server-specific integration. In essence, you provide capabilities to the LLM simply by providing the functions and their definitions.
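To make the idea of a self-describing function more concrete, here is a minimal sketch of what such a definition might look like. The field names (`name`, `description`, `inputSchema`) follow the way MCP servers list their tools; the concrete `web_search` tool and the `describeForLlm` helper are hypothetical and only serve as an illustration.

```typescript
// Shape of a tool as an MCP server advertises it: a name, a human-readable
// description, and a JSON Schema describing the expected arguments.
interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string; description?: string }>;
    required?: string[];
  };
}

// Hypothetical example tool, not taken from a real server.
const webSearch: ToolDefinition = {
  name: "web_search",
  description: "Searches the web and returns a list of matching pages.",
  inputSchema: {
    type: "object",
    properties: {
      query: { type: "string", description: "The search terms" },
      count: { type: "number", description: "Maximum number of results" },
    },
    required: ["query"],
  },
};

// Hypothetical helper: renders the definition as a one-line signature,
// marking parameters that are not listed as required with a "?".
function describeForLlm(tool: ToolDefinition): string {
  const required = tool.inputSchema.required || [];
  const params = Object.keys(tool.inputSchema.properties).map((key) => {
    const optional = required.indexOf(key) === -1 ? "?" : "";
    return `${key}: ${tool.inputSchema.properties[key].type}${optional}`;
  });
  return `${tool.name}(${params.join(", ")}) - ${tool.description}`;
}

console.log(describeForLlm(webSearch));
```

Because name, description, and schema travel together, a host application can hand the definition to the LLM as-is without writing any server-specific glue code.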

As this might all sound a bit abstract, let’s look at the integration in the Theia IDE and two examples, so that you get a concrete idea of the advantages MCP provides.

Integration into Theia AI and Theia IDE

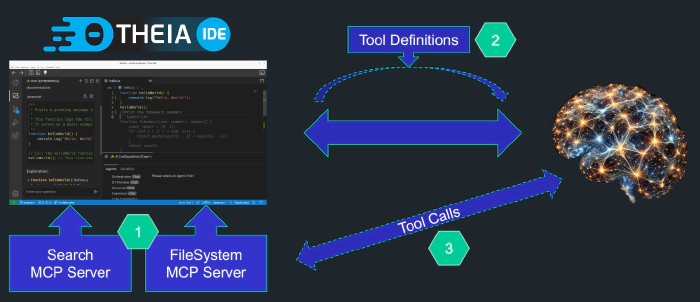

With MCP integrated into Theia AI, and consequently the AI-powered Theia IDE, users can now configure and utilize MCP servers within their development environment. Let’s look at a concrete example: We will enable a chat assistant in the Theia IDE to search the web and incorporate the results of the web search via an MCP server.

The diagram below shows the configuration workflow. First (1) we add a new MCP server to the Theia IDE. The Theia IDE will read the capabilities of the MCP server, in this case a function to conduct a search. Now Theia AI can offer this function as a tool function to the underlying LLM (2). The provided functions come with a description and a schema, so the LLM can decide when to call such a function and also knows how to call it. As soon as the LLM needs to use the capability, it will trigger a tool call for a function provided by an MCP server (3).

Theia AI and the AI-powered Theia IDE integrate the Model Context Protocol (MCP)
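On the protocol level, steps (1) to (3) roughly correspond to two JSON-RPC 2.0 requests that an MCP client sends to the server: `tools/list` to discover the available functions and `tools/call` to invoke one of them. The following sketch only builds these messages. The tool name `brave_web_search` and its `query` argument are assumptions for illustration, and the transport (for example stdio) is left out entirely.

```typescript
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextId = 1;

// Steps 1 and 2: ask the server which tools it provides.
function listToolsRequest(): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/list" };
}

// Step 3: invoke one tool with the arguments chosen by the LLM.
function callToolRequest(toolName: string, args: Record<string, unknown>): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id: nextId++,
    method: "tools/call",
    params: { name: toolName, arguments: args },
  };
}

const discover = listToolsRequest();
// Assumed tool name and argument, for illustration only.
const call = callToolRequest("brave_web_search", { query: "Eclipse Theia MCP" });

console.log(JSON.stringify(discover));
console.log(JSON.stringify(call, null, 2));
```

The response to `tools/list` carries the descriptions and schemas mentioned above, which is what lets the LLM decide when and how to issue the later `tools/call`.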

Let’s look at this workflow for the concrete example of the “web search” capability. There is an existing MCP server “brave-search” providing local and web search as functions. We can easily add it via the following user settings. As this server is provided as a node package, it can be automatically downloaded on first start (see more details in Theia’s documentation).

{
  "ai-features.mcp.mcpServers": {
    "brave-search": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-brave-search"
      ],
      "env": {
        "BRAVE_API_KEY": "YOUR_API_KEY"
      }
    }
  },
  ...
}
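Conceptually, each entry under `ai-features.mcp.mcpServers` describes a process to start: a command, its arguments, and additional environment variables. The sketch below shows one way a host application could turn such an entry into launch parameters. It is not Theia's actual implementation; `McpServerConfig`, `LaunchSpec`, and `toLaunchSpec` are hypothetical names, and the base environment is a made-up example.

```typescript
interface McpServerConfig {
  command: string;
  args?: string[];
  env?: Record<string, string>;
}

interface LaunchSpec {
  commandLine: string;
  env: Record<string, string>;
}

// Hypothetical helper: joins command and arguments, and layers the configured
// variables on top of a base environment (configured values win on conflict).
function toLaunchSpec(config: McpServerConfig, baseEnv: Record<string, string>): LaunchSpec {
  const args = config.args || [];
  return {
    commandLine: [config.command].concat(args).join(" "),
    env: { ...baseEnv, ...(config.env || {}) },
  };
}

// Same values as the "brave-search" entry in the settings above.
const braveSearch: McpServerConfig = {
  command: "npx",
  args: ["-y", "@modelcontextprotocol/server-brave-search"],
  env: { BRAVE_API_KEY: "YOUR_API_KEY" },
};

const spec = toLaunchSpec(braveSearch, { PATH: "/usr/bin" });
console.log(spec.commandLine);
console.log(Object.keys(spec.env).join(", "));
```

The `-y` flag lets `npx` fetch the package without prompting, which is why the server can be downloaded automatically on first start.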

Now the functions provided by the MCP server are available to Theia AI. The only remaining question is: how do we tell the LLM which functions are available? Here it comes in handy that Theia AI allows you to specify which tool functions are available to agents and their LLMs via the user-configurable prompt. Theia AI will pick up all specified tool functions and make them available to the LLM.

As shown in the following video, we only need to add a tool function to the system prompt of an agent. In this example, we talk to an agent (chat on the left side) with an empty system prompt (on the right side). We ask about recent information that the underlying LLM does not know from its training data. It hallucinates, as it does not know the answer.

Then we modify the prompt of the agent (editor on the right side) and simply add the function provided via MCP. This enables the underlying LLM to search the internet. As the function describes itself, we do not need to explain anything else. In the second request, we can observe that the underlying LLM now decides to search the internet and comes up with an accurate answer.
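As a rough illustration of what “adding the function to the prompt” means, the sketch below scans a prompt for function references. It rests on two assumptions that you should verify against Theia's documentation: that tool functions are referenced with a `~{functionId}` placeholder, and that MCP functions are exposed under identifiers of the form `mcp_<server>_<tool>`. `extractToolReferences` is a hypothetical helper, not part of Theia AI.

```typescript
// Assumption: a tool function is referenced in a prompt as ~{functionId}.
function extractToolReferences(promptText: string): string[] {
  const pattern = /~\{([^}\s]+)\}/g;
  const ids: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(promptText)) !== null) {
    ids.push(match[1]);
  }
  return ids;
}

// Assumed identifier format: mcp_<server>_<tool>.
const systemPrompt = [
  "You are a helpful assistant.",
  "Use ~{mcp_brave-search_brave_web_search} to look up recent information.",
].join("\n");

console.log(extractToolReferences(systemPrompt));
```

A host that resolves these identifiers against the functions reported by the configured MCP servers can then pass the matching definitions to the LLM along with the prompt.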

The Theia IDE does not yet include MCP servers in any standard prompts. However, it already allows end users to add capabilities of the MCP ecosystem themselves as shown above. In the future, we plan to provide ready-to-use prompts using MCP servers and support auto-starting configured servers.

The ecosystem of servers available in the MCP Servers Repository opens up many possibilities, especially as you can very easily combine the capabilities of several servers into advanced workflows. In the following example, we use three MCP servers:

A Memory Graph: Allows the LLM to capture knowledge, e.g. about the user, their task or other important information

Web Search Integration: Enables the LLM to search the internet (as before)

Filesystem Operations: Allows the LLM to interact with the local file system, e.g. to create files (in restricted directories)

As demonstrated in the video below, we first tell the LLM something about our work, i.e., that we are working on the integration of MCP. The LLM then uses the provided knowledge graph to “remember” this information in a structured way.

We then open a new chat. This means the LLM has no knowledge of the previous conversation, except of course the knowledge it recorded in the memory MCP server. In this new chat, we ask it to research “the topic we are currently working on”. The LLM retrieves the knowledge of what we are working on and searches the internet. Finally, because we told it to, it puts the results directly into a file using the file system MCP server.
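For reference, a setup like the one in this example could be expressed as three entries in the same `ai-features.mcp.mcpServers` setting used earlier. The sketch below builds such a settings object and prints it as JSON. The package names are those of the reference servers in the MCP Servers Repository as we understand them, `npxServer` is a hypothetical helper, and `/path/to/allowed/dir` is a placeholder for the directory the filesystem server may access.

```typescript
interface McpServerConfig {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

// Hypothetical helper for servers published as npm packages and started via npx.
function npxServer(pkg: string, extraArgs: string[] = [], env?: Record<string, string>): McpServerConfig {
  const config: McpServerConfig = { command: "npx", args: ["-y", pkg].concat(extraArgs) };
  if (env) {
    config.env = env;
  }
  return config;
}

const settings = {
  "ai-features.mcp.mcpServers": {
    "memory": npxServer("@modelcontextprotocol/server-memory"),
    "brave-search": npxServer("@modelcontextprotocol/server-brave-search", [], {
      BRAVE_API_KEY: "YOUR_API_KEY",
    }),
    // Placeholder path: the directory passed here is the one the server is
    // meant to be restricted to.
    "filesystem": npxServer("@modelcontextprotocol/server-filesystem", ["/path/to/allowed/dir"]),
  },
};

console.log(JSON.stringify(settings, null, 2));
```

Each server stays independent; the combination into a workflow happens purely through the LLM deciding which of the offered functions to call and in which order.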

Conclusion

This integration marks a significant step forward in creating more connected and efficient AI-assisted development environments. We encourage users of Theia to explore these new capabilities and look forward to seeing the innovative applications that emerge. To explore available MCP servers and their functionalities, visit the MCP Servers Repository. As you have seen, you can very easily integrate them into the Theia IDE and explore their capabilities. If you find something useful, please consider sharing your workflows with the community by creating a discussion!

To learn more about the AI-powered Theia IDE and Theia AI, visit the Theia AI introduction and the Theia IDE overview, or download the AI-powered Theia IDE here.

If you are interested in building custom AI-powered tools, EclipseSource provides consulting and implementation services backed by our extensive experience with successful AI tool projects. We also specialize in tailored AI assistance for web- and cloud-based tools and professional support for popular platforms like Eclipse Theia and VS Code.

Get in touch with us to discuss your specific use case.