Jonas Helming, Maximilian Koegel and Philip Langer co-lead EclipseSource, specializing in consulting and engineering innovative, customized tools and IDEs, with a strong …

Theia AI Sneak Preview: Custom Part Renderer and actionable responses

September 27, 2024 | 7 min Read

Do you want to augment your tool or IDE with AI assistance and go beyond a simple chat interface? Do you want to assist users with actual workflows and boost their efficiency by making AI support actionable, such as with links, buttons, or other interactive UI controls? In this article, we show an example of how you can easily create actionable AI support in your tool with Theia AI, a fully open AI framework for building AI assistance for tools and IDEs. We highlight one of its core principles, Tailorability and Extensibility for Tool Builders, using the example of an agent that executes arbitrary commands in the Theia IDE.

Theia AI is an open and flexible technology that enables developers and companies to build tailored AI-enhanced custom tools and IDEs. Theia AI significantly simplifies this task by taking care of base features such as LLM access, a customizable chat view, prompt templating and much more. It lets tool developers focus on engineering prompts for their use cases and integrating them seamlessly in Theia’s editors and views, as well as in the tool provider’s custom editors and views. Theia AI is part of the Theia Platform and is ready to be adopted by tool builders who want to be in full control of their AI solutions. Learn more about the vision of Theia AI.

Theia IDE is a modern and open IDE built on the Theia platform. With version 1.54, Theia IDE will integrate experimental AI support based on Theia AI to showcase AI-powered functionalities in a highly customizable, transparent and open setting. Learn more about the Theia IDE.

In a previous article, we demonstrated how easy it is to extend Theia AI with a custom agent. This agent was able to identify a command in the Theia IDE based on a user question, e.g. “how to toggle the toolbar”. However, that simple agent only answered in the chat with the command ID, so the user still had to execute the command manually. In this article, we demonstrate how you can make a response from an LLM actionable. In this case, we want to add a button to the chat that directly executes the command.

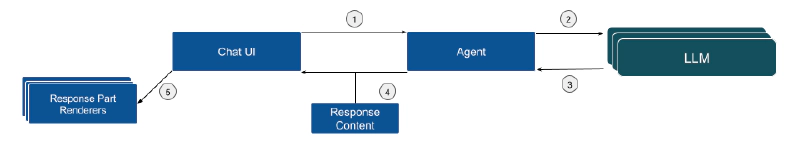

The diagram below shows the basic flow for transforming an LLM response into an actionable UI control in Theia AI. The user starts a request in the default Chat UI, which is sent to the underlying LLM. By default, the agent could simply forward the response to the Chat UI as is. In our case, however, we want to augment the Response Content with actionable UI controls. The response content sent to the Chat UI consists of one or more response parts, each having a type and dedicated data fields. If we detect that the LLM response contains an executable command, we represent it as a dedicated response part in the response content, which serves as an annotated placeholder for the command. Finally, the Theia AI Chat UI is extensible via so-called Response Part Renderers. For each response part in the response content it receives, the Chat UI uses the registered response part renderers to show the final result. In our example, we register a specific renderer for response parts that represent an executable command. This renderer shows a button and hooks up a handler that executes the command when the button is clicked. Let’s quickly implement this!
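To make the idea of typed response parts more concrete, here is a minimal sketch. The interface names (`ResponsePart`, `TextPart`, `CommandPart`) and the type guard `isCommandPart` are hypothetical simplifications chosen for illustration; they are not the actual Theia AI interfaces, which may look different.

```typescript
// Hypothetical, simplified shapes for illustration only;
// the actual Theia AI interfaces may differ.
interface ResponsePart {
    kind: string; // discriminator naming the type of this part
}

interface TextPart extends ResponsePart {
    kind: 'text';
    text: string;
}

interface CommandPart extends ResponsePart {
    kind: 'command';
    commandId: string;
}

// Type guard that narrows a generic part to a command part.
function isCommandPart(part: ResponsePart): part is CommandPart {
    return part.kind === 'command'
        && typeof (part as CommandPart).commandId === 'string';
}

const intro: TextPart = { kind: 'text', text: 'This command selects the icon theme:' };
const action: CommandPart = { kind: 'command', commandId: 'workbench.action.selectIconTheme' };

// A response content is an ordered list of parts of mixed types.
const responseContent: ResponsePart[] = [intro, action];

// Only the command parts would be turned into actionable controls.
const actionableIds = responseContent.filter(isCommandPart).map(part => part.commandId);
console.log(actionableIds);
```

A discriminator field plus a type guard lets a UI layer decide per part which renderer applies, without knowing every part type up front.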

The first step is typically the most effort, and it is actually unrelated to Theia AI: You need to develop a prompt that reliably returns the expected output. In our case, the prompt should return the executable Theia command that the user is looking for with their question. We want this response in a parsable format, so that we can easily detect the command in the LLM response and offer the user the option to execute it directly. Getting such prompts right typically requires a bit of evaluation. A huge help in Theia AI is that you can modify the prompt templates at runtime to test different scenarios and tweak the prompt until you are satisfied. Also, if the underlying LLM supports it, structured output is a huge time saver and guarantees parsable results. We won’t go into detail about prompt engineering here. Let’s assume we have a simple prompt like this:

    You are a service that helps users find commands to execute in an IDE.
    You reply with stringified JSON Objects that tell the user which command to execute and its arguments, if any.

    Example:

    {
        "type": "theia-command",
        "commandId": "workbench.action.selectIconTheme"
    }

    Here are the known Theia commands:
    Begin List:
    ${command-ids}
    End List

For an explanation of the variable command-ids, please refer to our previous article.
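As a rough illustration of what resolving such a placeholder amounts to, the following sketch replaces `${...}` variables in a template string. The function `resolveTemplate` and the sample command list are assumptions made for this example; Theia AI’s actual prompt templating and variable resolution may work differently.

```typescript
// Minimal sketch of placeholder substitution; Theia AI's real template
// handling may work differently.
function resolveTemplate(template: string, variables: ReadonlyMap<string, string>): string {
    // Matches ${variable-name}; unknown variables are left untouched.
    return template.replace(/\$\{([\w-]+)\}/g,
        (placeholder: string, variableName: string) => variables.get(variableName) ?? placeholder);
}

// The tail of the prompt shown above (a plain string, not a template literal).
const promptTail = 'Begin List:\n${command-ids}\nEnd List';

// Hypothetical command list; in the article it comes from the Theia command registry.
const knownCommandIds = ['workbench.action.selectIconTheme', 'core.about'];

const resolved = resolveTemplate(
    promptTail,
    new Map([['command-ids', knownCommandIds.join('\n')]])
);
console.log(resolved);
```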

As a next step, we parse the response in our agent implementation and transform it into a Response Content. As shown in the code example below, we first parse the response. Second, we look up the corresponding command using the default Theia API. Finally, we create a new wrapper object, a command chat response part, containing this command so that it can be processed by the Chat UI. Please note that, for simplicity, the code example does not include any error handling.

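    // Parse the JSON string returned by the LLM.
    const parsedCommand = JSON.parse(jsonString) as ParsedCommand;
    // Look up the command in the Theia command registry (undefined if unknown).
    const theiaCommand = this.commandRegistry.getCommand(parsedCommand.commandId);
    // Wrap the command in a dedicated response part for the Chat UI.
    return new CommandChatResponseContentImpl(theiaCommand);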
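Since the snippet above omits error handling, here is one possible way to guard the parsing step, written as a self-contained sketch. The types `KnownCommand` and `ParseResult`, the helper `isParsedCommand`, and the fallback to a plain text part are assumptions for illustration and not part of the Theia AI API; the command lookup is passed in as a function so the sketch runs without Theia.

```typescript
// Hypothetical, simplified types; not the actual Theia AI classes.
interface ParsedCommand { type: string; commandId: string; }
interface KnownCommand { id: string; label?: string; }
type ParseResult =
    | { kind: 'command'; command: KnownCommand }
    | { kind: 'text'; text: string };

// Checks that an arbitrary parsed value has the shape requested in the prompt.
function isParsedCommand(value: unknown): value is ParsedCommand {
    if (typeof value !== 'object' || value === null) {
        return false;
    }
    const candidate = value as Record<string, unknown>;
    return candidate['type'] === 'theia-command' && typeof candidate['commandId'] === 'string';
}

function toResponsePart(
    jsonString: string,
    getCommand: (id: string) => KnownCommand | undefined
): ParseResult {
    let parsed: unknown;
    try {
        parsed = JSON.parse(jsonString);
    } catch {
        // Not valid JSON: fall back to showing the raw LLM answer.
        return { kind: 'text', text: jsonString };
    }
    if (!isParsedCommand(parsed)) {
        return { kind: 'text', text: jsonString };
    }
    const command = getCommand(parsed.commandId);
    if (command === undefined) {
        // The LLM returned an ID that is not a registered command.
        return { kind: 'text', text: `Unknown command: ${parsed.commandId}` };
    }
    return { kind: 'command', command };
}

// Usage with a stand-in for the command registry lookup.
const registry = new Map<string, KnownCommand>([
    ['workbench.action.selectIconTheme', { id: 'workbench.action.selectIconTheme' }],
]);
const lookup = (id: string): KnownCommand | undefined => registry.get(id);
const part = toResponsePart('{"type":"theia-command","commandId":"workbench.action.selectIconTheme"}', lookup);
console.log(part.kind);
```

Falling back to a text part keeps the chat usable when the LLM ignores the requested format or returns an unknown command ID.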

Note that a chat response can contain a list of response parts of arbitrary types. This way, you can mix text or markdown with actionable response parts as needed.
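As an illustration of mixing text with an actionable part, the sketch below splits an LLM answer that embeds a JSON command object in surrounding prose into text and command parts. The `Part` type and the function `splitResponse` are hypothetical, and the approach of locating the JSON by its outermost braces is a simplifying assumption for this example, not how Theia AI necessarily does it.

```typescript
// Hypothetical part shapes for illustration; not the Theia AI interfaces.
type Part =
    | { kind: 'text'; text: string }
    | { kind: 'command'; commandId: string };

// Splits an LLM answer into text parts and, if present, one command part.
// Assumption: at most one JSON object, delimited by the outermost braces.
function splitResponse(llmText: string): Part[] {
    const asText: Part[] = [{ kind: 'text', text: llmText }];
    const start = llmText.indexOf('{');
    const end = llmText.lastIndexOf('}');
    if (start === -1 || end <= start) {
        return asText;
    }
    let commandId: unknown;
    try {
        const parsed = JSON.parse(llmText.slice(start, end + 1)) as { commandId?: unknown };
        commandId = parsed.commandId;
    } catch {
        return asText; // braces did not enclose valid JSON
    }
    if (typeof commandId !== 'string') {
        return asText;
    }
    const parts: Part[] = [];
    const before = llmText.slice(0, start).trim();
    const after = llmText.slice(end + 1).trim();
    if (before.length > 0) {
        parts.push({ kind: 'text', text: before });
    }
    parts.push({ kind: 'command', commandId });
    if (after.length > 0) {
        parts.push({ kind: 'text', text: after });
    }
    return parts;
}

const mixed = splitResponse(
    'Try this: {"type":"theia-command","commandId":"workbench.action.selectIconTheme"} It opens a picker.'
);
console.log(mixed.map(p => p.kind).join(','));
```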

Finally, to show a button in our chat, we create a new Response Part Renderer. As shown in the following code example, we register our Response Part Renderer to be responsible for CommandChatResponseContent (see #canHandle). This makes sure the Chat UI calls our renderer whenever corresponding content is part of the response. Based on this, we render a simple button (see #render) that executes the corresponding command.

    // Returns a priority: a positive number if this renderer is responsible, -1 otherwise.
    canHandle(response: ChatResponseContent): number {
        if (isCommandChatResponseContent(response)) {
            return 10;
        }
        return -1;
    }

    render(response: CommandChatResponseContent): ReactNode {
        const isCommandEnabled = this.commandRegistry.isEnabled(response.command.id);
        return (
            isCommandEnabled ? (
                // Pass the response part to the handler; the click event itself carries no command.
                <button className='theia-button main' onClick={() => this.onCommand(response)}>{response.command.label}</button>
            ) : (
                <div>The command has the id "{response.command.id}" but it is not executable globally from the Chat window.</div>
            )
        );
    }

    private onCommand(arg: AIChatCommandArguments): void {
        this.commandService.executeCommand(arg.command.id);
    }
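The numeric return value of `canHandle` suggests that renderers are chosen by priority. The following sketch shows how such a selection could work. It assumes that the renderer with the highest non-negative score wins, which is an assumption for illustration and not confirmed by this article; `ChatPart`, `PartRenderer`, and `pickRenderer` are hypothetical names, and `render` returns a string as a stand-in for a `ReactNode`.

```typescript
// Hypothetical, simplified stand-ins for the Chat UI's renderer contract.
interface ChatPart { kind: string; }

interface PartRenderer {
    // Returns a priority; a negative value means "cannot handle this part".
    canHandle(part: ChatPart): number;
    // A string stands in for the ReactNode a real renderer would return.
    render(part: ChatPart): string;
}

// Assumption: the renderer with the highest non-negative score is used.
function pickRenderer(part: ChatPart, renderers: readonly PartRenderer[]): PartRenderer | undefined {
    let best: PartRenderer | undefined;
    let bestScore = -1;
    for (const renderer of renderers) {
        const score = renderer.canHandle(part);
        if (score > bestScore) {
            best = renderer;
            bestScore = score;
        }
    }
    return best;
}

// Generic fallback with a low priority.
const fallbackRenderer: PartRenderer = {
    canHandle: () => 1,
    render: part => `[${part.kind}]`,
};

// Specialized renderer, mirroring the scores 10 and -1 from the example above.
const commandRenderer: PartRenderer = {
    canHandle: part => (part.kind === 'command' ? 10 : -1),
    render: () => '<button>Run command</button>',
};

const renderers = [fallbackRenderer, commandRenderer];
const commandPart: ChatPart = { kind: 'command' };
console.log(pickRenderer(commandPart, renderers)?.render(commandPart));
```

With a scheme like this, a specialized renderer can override a generic one for a given part type simply by returning a higher score.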

This is the result in the Theia IDE:

As we demonstrated, Theia AI makes it very easy to extend the default UI with custom elements, e.g. to make AI responses actionable. The same mechanism can also be used to provide customized widgets for specific content. As an example, the code widget in Theia AI provides syntax highlighting and features such as inserting the code into an editor. Other examples are interactive trees, sortable tables, etc.

Please note that extending the Chat UI is far from the only way Theia AI supports integrating AI features into the UX of your tool. Especially in custom, domain-specific tools, you might want to integrate the interaction with agents into custom editors, such as form-based UIs or diagrams. The open and flexible architecture of Theia AI is well suited for this, as it is targeted at tool builders. Furthermore, agents can access not only the Theia API, but also custom APIs, e.g. domain-specific services, to augment the communication with the underlying LLM. While chat has become established as the standard way of communicating with LLMs, we have learned in many customer projects that, for many use cases, a more direct integration into the user’s workflow can boost efficiency even more!

We will publish more showcases demonstrating the core principles of Theia AI within the next few days, see also:

← Previous: Create your own agent | Next: Let your agents talk to each other! →

Stay tuned and follow us on Twitter.

If you want to sponsor the project or use Theia AI to create your own AI solution, please get in contact with us. In particular, we are also looking for LLM providers who want to make their language models available via Theia AI.

EclipseSource is at the forefront of technological innovation, ready to guide and support your AI initiatives based on Theia AI or any other technology. Our comprehensive AI integration services provide the specialized know-how necessary to develop customized, AI-enhanced solutions that elevate your tools and IDEs. Explore how we can assist in integrating AI into your tools with our AI technology services. Reach out to begin your AI integration project with us.